Benchmarking a Video Codec: MJPEG on Canon 5D Mark IV

When Canon announced their new 5D model, the Mark IV, there was a lot of controversy about its 4K recording capabilities.

One point of debate is Canon’s questionable choice of codec: 4K is recorded only in MJPEG. I wanted to approach this problem from a semi-scientific standpoint and evaluate the codec’s quality in objective numbers. My aim was to take files natively encoded by the camera, re-encode them into MP4/H.264 videos at different bitrates, and find out how much bitrate is needed to avoid degrading the image quality of the original files.

I created a video that aggregates the main results of my research; it is available on YouTube.

Methodology

My approach was to encode an MJPEG master into H.264 files at different bitrates and compare video quality indicators. To generate the key metrics I used VQMT, which can compute purely technical metrics like PSNR (Peak Signal-to-Noise Ratio) as well as indicators based on models of human vision, like MS-SSIM (Multi-Scale Structural Similarity).
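For reference, PSNR is conventionally defined from the mean squared error between a reference frame $R$ and a degraded frame $D$ of size $W \times H$. The symbols are introduced here only for illustration, and the peak value of 255 assumes 8-bit samples; how exactly VQMT applies the formula (for example, to which planes) is not covered here.

$$
\mathrm{MSE} = \frac{1}{W H} \sum_{x=1}^{W} \sum_{y=1}^{H} \bigl( R(x,y) - D(x,y) \bigr)^2,
\qquad
\mathrm{PSNR} = 10 \log_{10} \frac{255^2}{\mathrm{MSE}} \ \text{dB}
$$

Under that assumption, an average PSNR of 50 dB corresponds to an MSE of $255^2 / 10^{5} \approx 0.65$, so higher values mean the re-encoded frame is numerically closer to the master.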

First, I created a shell script that takes a master file and drives the tools for conversion and analysis. It generates files encoded at 30, 60, 100, 200, 300, 400, 500 and 600 Mbit/s using ffmpeg and libx264. The specified bitrate is applied as an average bitrate.
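The encoding step can be sketched roughly as follows. This is not the original shell script but a minimal Python illustration of the same idea: it only builds the ffmpeg argument lists and does not run them. The output naming scheme, the `encoded` directory and the choice to drop audio with `-an` are assumptions made for this example.

```python
from pathlib import Path

# Average target bitrates from the text, in Mbit/s.
BITRATES_MBIT = [30, 60, 100, 200, 300, 400, 500, 600]


def build_encode_commands(master: str, out_dir: str = "encoded") -> list[list[str]]:
    """Return one ffmpeg argument list per target bitrate (nothing is executed)."""
    stem = Path(master).stem
    commands = []
    for mbit in BITRATES_MBIT:
        # Hypothetical naming scheme, e.g. encoded/master_30M.mp4
        output = str(Path(out_dir) / f"{stem}_{mbit}M.mp4")
        commands.append([
            "ffmpeg", "-y",
            "-i", master,
            "-c:v", "libx264",    # H.264 via libx264
            "-b:v", f"{mbit}M",   # average video bitrate target
            "-an",                # assumption: audio is not needed for the benchmark
            output,
        ])
    return commands


if __name__ == "__main__":
    for cmd in build_encode_commands("master.mov"):
        print(" ".join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` once the printed commands look right.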

VQMT produces a CSV file with the results of the individual methods; every frame in the video has a value. For the sake of completeness I used all available methods for the benchmark: PSNR, SSIM, MS-SSIM, VIFp, PSNR-HVS and PSNR-HVS-M. More information on some of the methods is available in the references section.

Testing material

I filmed five clips in 4K mode using the “VisionColor” color profile, which is relatively flat. The frame rate was 25 fps. I chose five different subjects in order to cover a wide range of material:

- almost completely still clip of a burning candle

- a panning move, with a wide color range and moderate movement

- tree with leaves quickly moving in the wind

- mixed frame, with trees and cars moving in the street

- moving face

The video files are viewable in the uploaded YouTube video.

Processing

The master files were originally around 30 seconds long. Unfortunately, analyzing such clips takes a lot of time: because VQMT is single-threaded, a 30-second clip needs around 50 minutes of processing time on my machine. As VQMT can only process raw YUV files, all files (master files and their re-encoded clips) need to be converted first. Fortunately, ffmpeg can do this losslessly. One note: raw YUV files are large; five seconds of video need 2.2 GB of space. Because of those constraints I left scene 2 at its original length and trimmed all others down to 5 seconds.
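The 2.2 GB figure can be sanity-checked with a little arithmetic. The sketch below assumes a frame size of 4096×2160 and 8-bit 4:2:2 planar YUV (2 bytes per pixel); neither is stated in the text above, but together with 25 fps they reproduce the quoted size.

```python
# Bytes per pixel for 8-bit planar YUV at common chroma subsamplings.
BYTES_PER_PIXEL = {"yuv420p": 1.5, "yuv422p": 2.0, "yuv444p": 3.0}


def raw_yuv_bytes(width, height, fps, seconds, pix_fmt="yuv422p"):
    """Size in bytes of an uncompressed 8-bit YUV clip."""
    frames = int(fps * seconds)
    return int(width * height * BYTES_PER_PIXEL[pix_fmt] * frames)


if __name__ == "__main__":
    # Assumed frame size 4096x2160; 25 fps and 5 seconds come from the text.
    size = raw_yuv_bytes(4096, 2160, fps=25, seconds=5)
    print(f"{size / 1e9:.2f} GB")  # prints "2.21 GB"
```

By the same calculation, an untrimmed 30-second clip would need roughly six times as much space for every raw file.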

Data Evaluation

To process and visualize the results I used Jupyter, an interactive code editor that is very useful for data analysis. The results for the specified video file are loaded and plotted by metric.

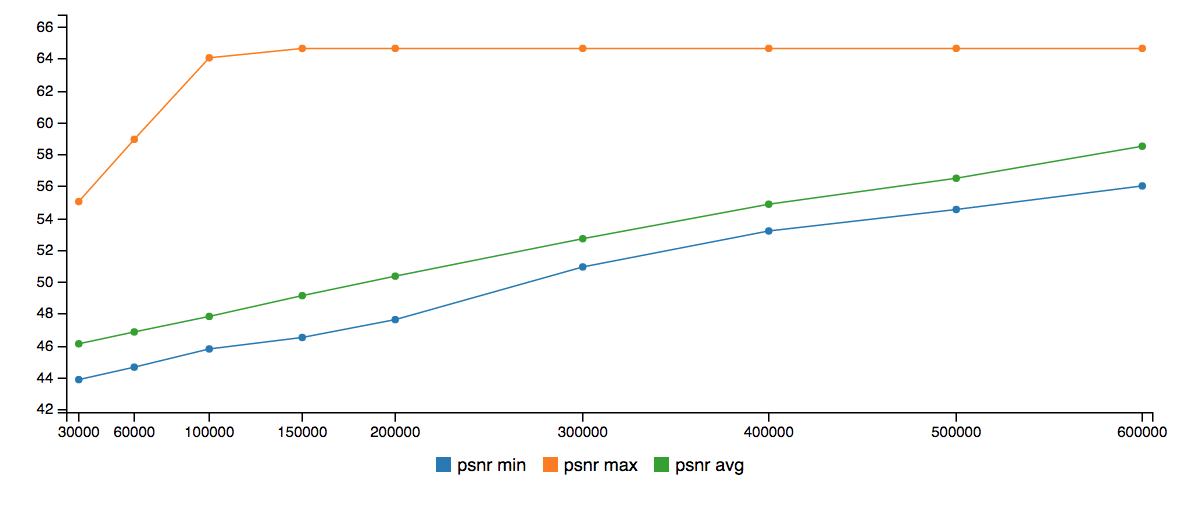

For every bitrate the average value is plotted as well as the worst encoded frame and the best performing frame.
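The three plotted values per bitrate can be derived from a per-frame CSV along these lines. This is a sketch rather than the notebook's actual code: the column names `frame` and `value` and the trailing summary row are assumptions about the CSV layout, and the sample numbers are made up purely to show the call.

```python
import csv
import io
from statistics import mean


def summarize_metric(csv_text):
    """Return (average, worst, best) over all per-frame values in one CSV.

    All metrics used here are "higher is better", so the worst frame is the
    minimum and the best frame is the maximum.
    """
    values = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            int(row["frame"])                 # skips summary rows such as "average"
            values.append(float(row["value"]))
        except (ValueError, TypeError, KeyError):
            continue
    return mean(values), min(values), max(values)


if __name__ == "__main__":
    # Made-up numbers, not measurements from the benchmark.
    sample = "frame,value\n0,48.0\n1,52.0\n2,50.0\naverage,50.0\n"
    print(summarize_metric(sample))
```

Running this once per bitrate and metric yields the three series (average, worst frame, best frame) that are drawn in each plot.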

Full results

Necessary Bit Rates

PSNR

There is a very similar pattern in all the videos: the best encoded frame has a PSNR of around 65 dB, which doesn’t improve beyond 150 Mbit/s. The average keeps increasing, even between 500 and 600 Mbit/s. One possible explanation is that MJPEG produces very specific artifacts that H.264 does not reproduce accurately, so a much higher bitrate would probably be necessary to match them. This probably would not lead to better picture quality, though.

Bit Rate necessary for average PSNR ≥ 50 dB

| Video | Bit rate |
|---|---|
| Video 1 (still) | 200 Mbit/s |
| Video 2 (pan) | 350 Mbit/s |
| Video 3 (leaves) | 600 Mbit/s |
| Video 4 (mixed) | 300 Mbit/s |
| Video 5 (face) | 500 Mbit/s |
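One way to turn per-bitrate averages into a "necessary bit rate" like the ones in the table above is to interpolate linearly between the tested bitrates. Whether the figures in this article were obtained this way or read off the plots is not stated, so treat the following as an illustrative sketch; the function name and the sample values are hypothetical.

```python
def bitrate_for_threshold(averages, threshold):
    """Lowest bitrate (Mbit/s) at which the average metric reaches `threshold`.

    `averages` maps tested bitrate -> average metric value. Between two tested
    bitrates the metric is assumed to change linearly. Returns None if the
    threshold is never reached.
    """
    points = sorted(averages.items())
    prev_rate, prev_value = points[0]
    if prev_value >= threshold:
        return float(prev_rate)
    for rate, value in points[1:]:
        if value >= threshold:
            # Here prev_value < threshold <= value, so the divisor is positive.
            fraction = (threshold - prev_value) / (value - prev_value)
            return prev_rate + fraction * (rate - prev_rate)
        prev_rate, prev_value = rate, value
    return None


if __name__ == "__main__":
    # Made-up averages, not measurements from the benchmark.
    example = {200: 48.0, 300: 49.0, 400: 51.0}
    print(bitrate_for_threshold(example, 50.0))  # prints 350.0
```

Linear interpolation is only a rough estimate, since quality metrics typically flatten out as the bitrate grows.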

MS-SSIM

The worst performing videos are 3 (leaves) and 5 (face), which is not very surprising: video 3 has a lot of movement, which is hard for H.264 to reproduce, and video 5 has more noise due to a high ISO setting.

Video 2 (pan) is rather easy to encode, because the human visual system can’t track structure that easily if the frame itself is constantly moving. This is reflected in the low bit rate.

Bit Rate necessary for average MS-SSIM ≥ 0.995

| Video | Bit rate |
|---|---|
| Video 1 (still) | 100 Mbit/s |
| Video 2 (pan) | 60 Mbit/s |
| Video 3 (leaves) | 200 Mbit/s |
| Video 4 (mixed) | 150 Mbit/s |
| Video 5 (face) | 300 Mbit/s |

Scenes with panning turned out to be much less complex than expected: they need a much lower bit rate for great visual quality.

VIFp

Bit Rate necessary for average VIFp:

| Video | ≥ 0.8 | ≥ 0.9 |
|---|---|---|
| Video 1 (still) | 300 Mbit/s | 500 Mbit/s |
| Video 2 (pan) | 100 Mbit/s | 250 Mbit/s |
| Video 3 (leaves) | 300 Mbit/s | 400 Mbit/s |
| Video 4 (mixed) | 100 Mbit/s | 300 Mbit/s |
| Video 5 (face) | 400 Mbit/s | 500 Mbit/s |

Conclusion

The answer to the codec question is: it depends heavily on the source and the destination. For human consumption the data rates do not have to be very high: the MS-SSIM is above 0.9 even at 30 Mbit/s on all tested clips.

For post-processing, the H.264 bit rate should be a lot higher: for high visual fidelity even 500 Mbit/s might be necessary, which is incidentally the same bit rate as the MJPEG master material.

All in all, it is clear that the MJPEG bit rate is way too high for delivery to consumers. That is fine, because most material will be post-processed first, and it seems that there is plenty of room for that.

It seems that Canon’s decision wasn’t so crazy after all.

Source code

- Script for converting a master file into multiple H.264 encoded clips

- Jupyter Notebook for parsing CSV files and plotting them